A package for improving the practice of platform-aware programming in Julia.

It helps HPC package developers write code for different versions of computationally intensive functions (kernels) according to different assumptions about the features of the execution platform.

We define platform-aware programming as the practice of coding computationally intensive functions, called kernels, using the most appropriate abstractions and programming interfaces, as well as performance tuning techniques, to take better advantage of the features of the target execution platform. This is a well-known practice in programming for HPC applications.

Platform-aware programming is especially suitable when the developer is interested in employing heterogeneous computing resources, such as accelerators (e.g., GPUs, FPGAs, and MICs), especially in conjunction with multicore and cluster computing.

For example, suppose a package developer is interested in providing a specialized kernel implementation for NVIDIA A100 Tensor Core GPUs, meeting the demand from users of a specific cloud provider offering virtual machines with accelerators of this model. The developer would like to use CUDA programming with this device's supported computing capability (8.0). However, other users may require support from other cloud providers that support different accelerator models, from different vendors (for example, AMD Instinct™ MI210 and Intel® Agilex™ F-Series FPGA and SoC FPGA). In this scenario, the developer will face the challenge of coding and deploying for multiple devices. This is a typical platform-aware programming scenario where PlatformAware.jl should be useful, which is becoming increasingly common as the use of heterogeneous computing platforms increases to accelerate AI and data analytics applications.

PlatformAware.jl is aimed primarily at package developers dealing with HPC concerns, especially using heterogenous computing resources. We assume that package users are only interested in using package operations without being concerned about how they are implemented.

We present a simple example that readers may reproduce to test PlatformAware.jl features.

Consider the problem of performing a convolution operation using a Fast Fourier Transform (FFT). To do this, the user can implement a fftconv function that uses a fft function offered by a user-defined package called MyFFT.jl, capable of performing the FFT on an accelerator (e.g., GPU) if it is present.

using MyFFT

fftconv(X,K) = fft(X) .* conj.(fft(K)) This tutorial shows how to create MyFFT.jl, demonstrating the basics of how to install PlatformAware.jl and how to use it to create a platform-aware package.

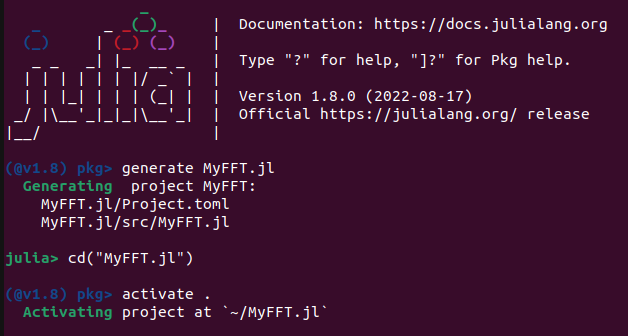

In the Julia REPL, as shown in the screenshot below, run ] generate MyFFT.jl to create a new project called MyFFT.jl, run 🔙cd("MyFFT.jl") to move to the directory of the created project, and ] activate . to enable the current project (MyFFT.jl) in the current Julia REPL session.

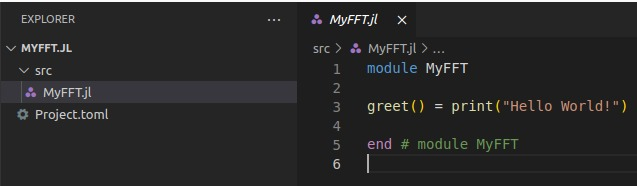

These operations create a standard "hello world" project, with the contents of the following snapshot:

Before coding the platform-aware package, it is necessary to add PlatormAware.jl as a dependency of MyFFT.jl by running the following command in the Julia REPL:

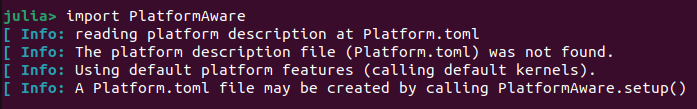

] add PlatformAwareNow, load the PlatfomAware.jl package (using PlatformAware or import PlatformAware) and read the output message:

Platform.toml is the platform description file, containing a set of key-value pairs, each describing a feature of the underlying platform. It must be created by the user running PlatformWare.setup(), which performs a sequence of feature detection operations on the platform.

Platform.toml is written in a human-editable format. Therefore, it can be modified by users to add undetected platform features or ignore detected features.

In order to implement the fft kernel function, we edit the src/MyFFT.jl file. First, we sketch the code of the fft kernel methods:

module MyFFT

import PlatformAware

# setup platorm features (parameters)

@platform feature clear

@platform feature accelerator_count

@platform feature accelerator_api

# Fallback kernel

@platform default fft(X) = ...

# OpenCL kernel, to be called

@platform aware fft({accelerator_count::(@atleast 1), accelerator_api::(@api OpenCL)}, X) = ...

# CUDA kernel

@platform aware fft({accelerator_count::(@atleast 1), accelerator_api::(@api CUDA)},X) = ...

export fft

endThe sequence of @platorm feature macro declarations specifies the set of platform parameters that will be used by subsequent kernel method declarations, that is, the assumptions that will be made to distinguish them. You can refer to this table for a list of all supported platform parameters. By default, they are all included. In the case of fft, the kernel methods are differentiated using only two parameters: accelerator_count and accelerator_api. They denote, respectively, assumptions about the number of accelerator devices and the native API they support.

The @platorm default macro declares the default kernel method, which will be called if none of the assumptions of other kernel methods declared using @platform aware macro calls are valid. The default kernel must be unique to avoid ambiguity.

Finally, the kernels for accelerators that support OpenCL and CUDA APIs are declared using the macro @platform aware. The list of platform parameters is declared just before the regular parameters, such as X, in braces. Their types denote assumptions. For example, @atleast 1 denotes a quantifier representing one or more units of a resource, while @api CUDA and @api OpenCL denote types of qualifiers that refer to the CUDA and OpenCL APIs.

The programmer must be careful not to declare kernel methods with overlapping assumptions in order to avoid ambiguities.

Before adding the code for the kernels, add the code to load their dependencies. This can be done directly by adding the following code to the src/MyFFT.jl file, right after import PlatformAware:

import CUDA

import OpenCL

import CLFFT

import FFTWAlso, you should add CUDA.jl, OpenCL.jl, CLFFT.jl, and FFFT.jl as dependencies of MyFFT.jl. To do this, execute the following commands in the Julia REPL:

] add CUDA OpenCL CLFFT FFTWNOTE: CLFFT.jl is not available on JuliaHub due to compatibility issues with recent versions of Julia. We're working with the CLFFT.jl maintainers to address this issue. If you have an error with the CLFFT dependency, point to our CLFFT.jl fork by running

add https://github.com/JuliaGPU/CLFFT.jl#master.

As a performance optimization, we can take advantage of platform-aware features to selectively load dependencies, speeding up the loading of MyFFT.jl. To do this, we first declare a kernel function called which_api in src/MyFFT.jl, right after the @platform feature declaration:

@platform default which_api() = :fftw

@platform aware which_api({accelerator_api::(@api CUDA)}) = :cufft

@platform aware which_api({accelerator_api::(@api OpenCL)}) = :clfftNext, we add the code for selective dependency loading:

api = which_api()

if (api == :cufft)

import CUDA

elseif (api == :clfft)

import OpenCL

import CLFFT

else # api == :fftw

import FFTW

endFinally, we present the complete code for src/MyFFT.jl, with the implementation of the kernel methods:

module MyFFT

using PlatformAware

@platform feature clear

@platform feature accelerator_count

@platform feature accelerator_api

@platform default which_api() = :fftw

@platform aware which_api({accelerator_count::(@atleast 1), accelerator_api::(@api CUDA)}) = :cufft

@platform aware which_api({accelerator_count::(@atleast 1), accelerator_api::(@api OpenCL)}) = :clfft

api = which_api()

@info "seleted FFT API" api

if (api == :cufft)

using CUDA; const cufft = CUDA.CUFFT

elseif (api == :clfft)

using OpenCL

using CLFFT; const clfft = CLFFT

else # api == :fftw

using FFTW; const fftw = FFTW

end

# Fallback kernel

@platform default fft(X) = fftw.fft(X)

# OpenCL kernel

@platform aware function fft({accelerator_count::(@atleast 1), accelerator_api::(@api OpenCL)}, X)

T = eltype(X)

_, ctx, queue = cl.create_compute_context()

bufX = cl.Buffer(T, ctx, :copy, hostbuf=X)

p = clfft.Plan(T, ctx, size(X))

clfft.set_layout!(p, :interleaved, :interleaved)

clfft.set_result!(p, :inplace)

clfft.bake!(p, queue)

clfft.enqueue_transform(p, :forward, [queue], bufX, nothing)

reshape(cl.read(queue, bufX), size(X))

end

# CUDA kernel

@platform aware fft({accelerator_count::(@atleast 1), accelerator_api::(@api CUDA)},X) = cufft.fft(X |> CuArray)

export fft

end # module MyFFTTo test fft in a convolution, open a Julia REPL session in the MyFFT.jl directory and execute the following commands:

NOTE: If you receive an ambiguity error after executing fftconv, don't panic ! Read the next paragraphs.

import Pkg; Pkg.activate(".")

using MyFFT

function fftconv(img,krn)

padkrn = zeros(size(img))

copyto!(padkrn,CartesianIndices(krn),krn,CartesianIndices(krn))

fft(img) .* conj.(fft(padkrn))

end

img = rand(Float64,(20,20,20)) # image

krn = rand(Float64,(4,4,4)) # kernel

fftconv(img,krn) The fft kernel method that corresponds to the current Platform.toml will be selected. If Platform.toml was not created before, the default kernel method will be selected. The reader can consult the Platform.toml file to find out about the platform features detected by PlatformAware.setup(). The reader can also see the selected FFT API in the logging messages after using MyFFT.

By carefully modifying the Platform.toml file, the reader can test all kernel methods. For example, if an NVIDIA GPU was recognized by PlatformAware.setup(), the accelerator_api entry in Platform.toml will probably include the supported CUDA and OpenCL versions. For example, for an NVIDIA GeForce 940MX GPU, accelerator_api = "CUDA_5_0;OpenCL_3_0;unset;unset;OpenGL_4_6;Vulkan_1_3;DirectX_11_0". This may lead to an ambiguity error, as multiple dispatch will not be able to distinguish between the OpenCL and CUDA kernel methods based on the accelerator_api parameter alone. In this case, there are two alternatives:

- To edit Platform.toml by setting CUDA or OpenCL platform type (e.g.

CUDA_5_0orOpenCL_3_0) tounsetin theaccelerator_apientry, making it possible to select manually the kernel method that will be selected; - To modify the CUDA kernel signature by including, for example,

accelerator_manufacturer::NVIDIAin the list of platform parameters, so that NVIDIA GPUs will give preference to CUDA and OpenCL will be applied to accelerators of other vendors (recommended).

Therefore, we suggest the following general guideline for package developers who want to take advantage of PlatformWare.jl.

-

Identify the kernel functions, that is, the functions with high computational requirements in your package, which are the natural candidates to exploit parallel computing, acceleration resources, or both.

-

Provide a default (fallback) method for each kernel function, using the

@platform defaultmacro. -

Identify the target execution platforms to which you want to provide specialized methods for each kernel function. You can choose a set of execution platforms for all kernels, or you can select one or more platforms for each kernel independently. For helping your choice, look at the following information sources:

- the table of supported platform parameters, which will help you to know which assumptions PlatformAware.jl already allow you to make about the target execution platorm;

- the database of supported platform features, where the features of the models of processors and accelerators that are currently suported by PlatformAware.jl are described:

- AMD accelerators and processors;

- Intel accelerators and processors;

- NVIDIA accelerators.

-

For each platform you select, define a set of assumptions about its features that will guide your implementation decisions. In fact, it is possible to define different assumptions for the same platform, leading to multiple implementations of a kernel for the same platform. For example, you might decide to implement different parallel algorithms to solve a problem according to the number of nodes and the interconnection characteristics of a cluster.

-

Provide platform-aware methods for each kernel function using the

@platform awaremacro. -

After implementing and testing all platform-aware methods, you have a list of platform parameters that were used to make assumptions about the target execution platform(s). You can optionally instruct the PlatformAware.jl to use only that parameters by using the

@platform featuremacro.

F. H. de Carvalho Junior, A. B. Dantas, J. M. Hoffiman, T. Carneiro, C. S. Sales, and P. A. S. Sales. 2023. Structured Platform-Aware Programming. In XXIV Symposium on High-Performance Computational Systems (SSCAD’2023) (Porto Alegre, RS). SBC, Porto Alegre, Brazil, 301–312. https://sol.sbc.org.br/index.php/sscad/article/view/26529

Contributions are very welcome, as are feature requests and suggestions.

Please open an issue if you encounter any problems.

PlatformAware.jl is licensed under the MIT License